In information technology and software development, how many of our wounds are self-inflicted?

Here’s what I mean.

What I’ve seen happen, repeatedly, in IT over the years and decades: idealistic but inexperienced people come along (within IT itself or in other departments within a company), to whom IT systems and issues seem to be easier than they in fact are. They are smart and earnest and oh-so-self-assured, but they also seem blissfully unburdened by much real understanding of past approaches.

They are dismissive of the need for much (if any) rigor. They often generalize quite broadly from very limited experience. Most notably, they fail to understand that what may be effective for an individual or even a small group of developers often doesn’t translate into working well for a team of any size. And, alas, there’s usually a whole host of consultants, book authors, and conference presenters who are willing and eager to feed their idealistic simplifications.

Over the years, I’ve seen a subset of developers in particular argue vehemently against any number of prudent and long-accepted IT practices: variously, things like source code control, scripted builds, reuse of code, and many others. (Oh, yes, and the use of estimates. Or, planning in general.) To be sure: it’s not just developers; we see seasoned industry analysts, for reputable firms, actually proposing that to get better quality, you need to “fire your QA team.” And actually getting applauded by many for “out of the box thinking.” Self-inflicted wounds.

But let’s talk about just one of these “throw out the long-used practice” memes that pop up regularly: dismissing the value of bug tracking.

How can anyone argue against tracking bugs? Unbelievably, they do, and vigorously.

A number of years back, I came into a struggling social networking company as their first CTO. Among other issues, I discovered that the dev team had basically stopped tracking bugs a year or two before, and were proud of that. Did that mean their software had no bugs? Not when I talked to the business stakeholders, who lamented that nearly every system was already bug-riddled, and getting worse by the release.

Why did the development team shun bug tracking? That wasn’t quite so clear.

They told me dismissively that Bugzilla (their former, now-mothballed bug tracking tool of choice) had just “filled up with a lot of text”. They cited all sorts of idealistic Agile justifications: if you really want to address a bug, you should do it right away, they opined, otherwise it’s not really important anyway. Index cards make the bug more visible than burying it in a tracking system. And so on. They showed me with great pride how they’d set up a light to turn green when all the automated tests passed. And hey, the light was green. In short, they were in deep denial about the proliferation of bugs. And no tracking meant no real data existed to contradict their view that things were fine, just people’s anecdotal impressions and vocal complaints.

But then, looking around the team’s work area, I found hundreds of index cards for various bugs, some of them many months old, randomly scattered in piles behind various workstations, scribbled with cryptic notes. No one knew for sure which bugs had been fixed, worked on, dismissed, etc. Least of all the stakeholders. And the end customers complained endlessly about bugs to the company’s customer care representatives, who then felt that no one internal really listened to them as the “voice of the customer.”

Recurring, self-inflicted wounds

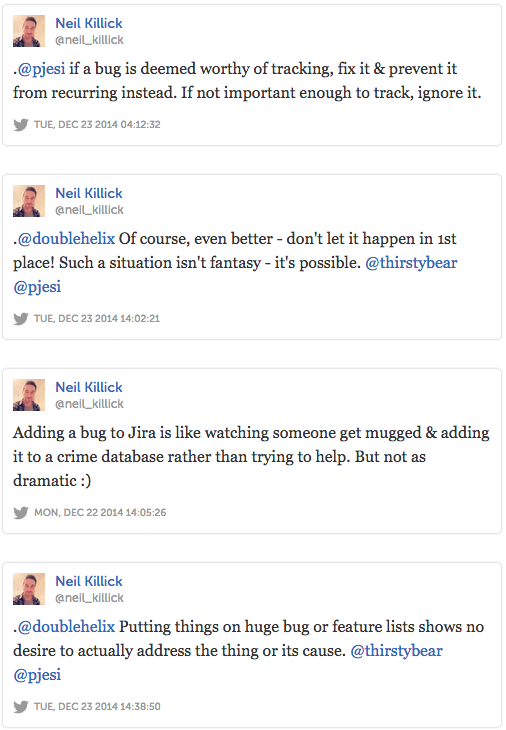

So it’s déjà vu for me when I read extreme, wrong-headed tweets like this:

This isn’t just a one-individual disconnect or blind spot. It’s distressingly common among software developers and (often) their management: the mistaken but dogged belief that we’ll find all bugs, and we’ll just fix them as we find them. But that simply doesn’t happen on non-trivial systems or projects. As Joel Spolsky noted, “programmers consistently seem to believe … that they can remember all their bugs or keep them on post-it notes.” But developers can’t, and they don’t. And anyone who has worked in a development shop of any reasonable size knows that developers can’t, and that even if they could, there are numerous compelling reasons to more formally track bugs anyway.

Half an hour of surfing (see the Lagniappe below) and a little brainstorming will get you a list of powerful reasons to do bug tracking more formally than using scattered index cards:

What you lose

Here are just a few of the things a team loses when there’s no use of bug tracking software:

- Information is lacking that is needed for troubleshooting the issue and confirming a fix. Index cards rarely contain the full scenario needed to understand and resolve the bug: specifically, the detailed steps to reproduce it, the expected result, and the actual result. Instead, you tend to get scribbled notes like “the footer sometimes gets wonky in the checkout module”.

- Other than leaning on “tribal knowledge” (essentially, talking to individual heroes and gurus on the team), developers have to start from scratch every time an issue comes in. They can’t easily leverage what’s already been solved.

- With just “tribal knowledge” and no searchable database, it’s hard to tell if a newly reported issue is a known problem in an unusual guise, or a completely new problem.

- It’s difficult, lacking a historical database of bugs, to identify a pattern in the bugs that might suggest a more holistic fix than just addressing each individual bug.

- Lacking a searchable database of bugs and the ensuing record of discovery/research on them, it’s much more difficult to transfer responsibility for a given bug from one developer to another.

- Users have no clear process for ensuring or confirming that their problem has been recorded.

- Users noticing software issues can’t easily look to see if those issues have already been reported.

- In any normal business environment, people tend to submit issues multiple ways: phone, email, paper, even a hallway conversation. When those issues are not consolidated in one place, it becomes remarkably easy for issues to fall off the radar.

- Having no system of record for problems worsens business relations and randomizes the team: in particular, submitters tend to repeatedly request personal updates from whoever they know on the team.

- With no central place for updates, managers can’t get much of the overview they need to make tactical and strategic decisions. Instead, they end up spending a lot of time tracking down individuals to gauge where things stand.

- Other than shuffling laboriously through a stack of cards, no one can easily understand how many known bugs are left to fix, what the severity of those bugs is, etc.

- Release readiness evaluations can’t take into account the rate of bug discovery, which can be key to deciding if the software is truly launch-worthy.

- A scribbled index card can’t be easily consulted later to find out what the solution was.
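To make a few of these points concrete (the full reproduction scenario, searchability, open counts by severity, and the rate of bug discovery), here is a minimal sketch in Python of what even the simplest system of record captures that a pile of index cards does not. It is a hypothetical illustration, not the data model of Bugzilla or any other real tool: the `Bug` class, its field names, and the helper functions are all assumptions made up for this example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class Bug:
    """Hypothetical minimal bug record; field names are illustrative only."""
    bug_id: int
    summary: str
    steps_to_reproduce: list[str]  # the scenario an index card usually omits
    expected: str                  # expected result
    actual: str                    # actual result
    severity: str                  # e.g. "critical", "major", "minor"
    reported_on: date
    status: str = "open"           # e.g. "open", "fixed", "wont_fix"
    resolution: str = ""           # what the fix was, for later lookup


def search(bugs: list[Bug], term: str) -> list[Bug]:
    """Case-insensitive search over summary, actual result, and resolution,
    so a new report can be checked against known problems."""
    term = term.lower()
    return [
        b for b in bugs
        if term in b.summary.lower()
        or term in b.actual.lower()
        or term in b.resolution.lower()
    ]


def open_by_severity(bugs: list[Bug]) -> Counter:
    """How many known bugs remain open, grouped by severity."""
    return Counter(b.severity for b in bugs if b.status == "open")


def weekly_discovery_rate(bugs: list[Bug]) -> dict[tuple[int, int], int]:
    """Bugs reported per ISO (year, week): one rough input to release readiness."""
    counts: Counter = Counter()
    for b in bugs:
        year, week, _ = b.reported_on.isocalendar()
        counts[(year, week)] += 1
    return dict(sorted(counts.items()))


# Invented sample data, for illustration only.
bugs = [
    Bug(1, "Checkout footer misaligned",
        ["Add an item to the cart", "Open checkout in a narrow window"],
        "Footer stays below the form", "Footer overlaps the Pay button",
        "major", date(2024, 3, 4)),
    Bug(2, "Login times out", ["Log in with SSO"],
        "Dashboard loads", "Spinner never stops",
        "critical", date(2024, 3, 6),
        status="fixed", resolution="Raised SSO token timeout"),
    Bug(3, "Typo on profile page", ["Open the profile page"],
        "Heading reads 'Settings'", "Heading reads 'Setings'",
        "minor", date(2024, 3, 12)),
]

print([b.bug_id for b in search(bugs, "footer")])  # → [1]
print([b.bug_id for b in search(bugs, "timeout")])  # → [2]
print(dict(open_by_severity(bugs)))                 # → {'major': 1, 'minor': 1}
print(weekly_discovery_rate(bugs))                  # → {(2024, 10): 2, (2024, 11): 1}
```

The point is not the code itself but that each of these questions becomes a one-line query once the records live in one place; with scattered index cards, each one means shuffling through a stack.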

And to reject all these solid reasons (and yes, there are lots more) out of hand? Or to even opine, as one vocal Twitter poster actually did on this topic, that “memory is waste”? Well, there goes IT again, with another arrogant, amnesiac, self-inflicted wound. Bug tracking as a software development practice is tried and true, with far more upside than downside (in short, not all overhead is waste, contrary to what you’ll be told). Yet some firms inexplicably allow teams to reject bug tracking nonetheless, and that (i.e., misguided management) is the problem. In fact, as cited here,

“Much of the blame for [software project] failures [lies] on management shortcomings, in particular the failure of both customers and suppliers to follow known best practice. In IT, best practice is rarely practiced. Projects are often poorly defined, codes of practice are frequently ignored and there is a woeful inability to learn from past experience.”

Bottom line: when you hear people dismissing the need for various long-standing, solidly understood processes in the development of software, it’s appropriate to be tremendously skeptical. Unless you want to be part of self-inflicting the next wound.

Lagniappe:

- IssueTrak, “Top 10 Reasons Why Your Organization Needs Issue Tracking Software”

- Sifter, “Why Use Bug and Issue Tracking?”

- Wikipedia, “Bug Tracking System”

- Joel Spolsky, “Painless Bug Tracking”, November 8, 2000

- neilj, “Why I Don’t Use Bug Tracking Software”, April 7, 2010

- neilj, “Why I Still Don’t Use Bug Tracking Software”, April 8, 2010

I love the 3rd tweet comparing tracking bugs to adding a mugging to a crime database rather than trying to help. First of all, I suppose he’s ignorant of the concept of ‘and’, a powerful idea that allows for more than one response to a situation. He also seems to be unaware that the tracking of crimes (and bugs) is beneficial in that it can give insight into areas that are more dangerous than others, allowing preventative action to be taken.

I’d say “amazing”, but sadly, that type of myopia is anything but.

Agreed. It’s unclear to me, though, what drives the resistance, besides a misplaced idealism of sorts, an odd belief that you have to deal with a bug NOW, no matter what. It’s kind of like thinking that you have to answer every email as soon as it comes in.

The case for bug tracking is really crystal clear to anyone with a scintilla of development experience beyond the tiny-team level. Or so one would think.

Thanks for commenting, Gene.

Neil’s notions appear to come from a domain where “mission critical” is not present. As Program Governance Director of a health care enrollment provider, I see “bugs” as the source of profit margin erosion, SLA non-compliance, wasted staff effort, and numerous other obstacles to an efficient work process.

It seems Neil’s solution is not to manage the work demands on the staff, but rather to “let it roll.”

This is willful ignorance of the basics of business and process management in every business domain beyond that of a sole contributor, which would indicate that Neil’s domain does not include spending and managing other people’s money.

Since I learned about XP in 2000, the general advice has been to not track any defects related to stories that are in the process of being built, beyond some simple system like extra sticky notes. In those cases, the story simply isn’t “done”. If defects are found outside of story development, then by all means track them in a manner appropriate to your domain.

Another infrequently mentioned practice that I learned from the original XP books is to perform root cause analysis on every defect. Identify the defect, characterize its nature, identify any other places in the system where the same sort of defect could occur, and finally identify how to make it impossible for the defect to occur again. This can be applied easily even outside of life/safety/mission critical systems, and in my experience is much more effective than a searchable database of defects.

Again, though, as Glen pointed out, the domain in which you’re working may not allow you to get away without a defect database. I’d still suggest that, if you’re using stories, defects representing incomplete stories discovered within the same iteration don’t necessarily need to be tracked. They simply represent an incomplete story.

Thanks for commenting, Dave. You bring up a worthwhile point: whether original development of a story should be an exception, in that no defect tracking is needed or useful. You clearly argue that it should be, since it just represents development in progress. I fall much more along the lines of it being a judgment call, and I unfortunately often see developers err in that judgment, towards the side of lack of tracking, along with its evil twin, lack of transparency.

There’s obviously (to me, anyway) a juncture in the act of originally building a particular story where a bug matters and should be tracked outside “the mind of the developer”. I’m not sure I could give a “one size fits all” definition of that juncture, though; yet I’m not comfortable saying “never”. Definitely worth discussion, as I said.

As for your second paragraph, I have to say my reaction is “if only”. Sure, analyze each defect and figure out how to make it impossible to have it occur again, anywhere, anytime. I can’t oppose that as theory/idealism, but really: it sometimes/often isn’t all that easy. I’m a huge proponent of adopting TDD to help in this (and other) respects, but that’s not infallible either. In any case, at no point would I advise shrugging off the need for a searchable database of defects simply because we have somehow convinced ourselves our practices are SO rock solid (across all members of the team past, present and future) that we will always manage to completely eradicate any bug we find, in any future incarnation whatsoever. Again, please consult the discussion in my post about the many advantages that a formalized record of defects brings you.

I appreciate the thoughtful comment.

The “no bug tracking software needed” is easily misunderstood and misapplied by some. In order to do this successfully, you must first have a development process that reduces the number of known bugs down to a level where you don’t *need* to keep a list of them.

You might quite reasonably think that this is unrealistic or impossible, based on your experience.

But other people have experienced success with this. I worked at a place (not at all unique in my experience) where any bug that was found had to be fixed at the start of the next two-week “sprint,” before any paying work. This was required by the contract, and it was not a problem. There were usually several months between bug discoveries. And it was not a trivial system: we had a team of around half a dozen developers working full time, for over seven years, building and extending a business system that was live in production for nearly that whole time. I don’t know of any time when we had more than one known bug, for the whole system, waiting to be fixed.

When your bug counts are extremely low, and your software is so highly maintainable that most bugs are easy to fix, you can easily get to the point of asking why one should bother waiting for weeks or months before fixing a bug. Why not just fix it and do away with the annoyance? If you can get to the point where it takes more man-hours to have a meeting to talk about prioritizing a bug than it takes to fix it, maybe it makes sense to just fix it.

Your customers paid to have functionality that works correctly. When we find that we have bugs, we know that we have failed to do that. Don’t our customers deserve to have a product that works correctly? Isn’t that what they paid for? And if we can do that without excessive cost, indeed with improved productivity, why wouldn’t we want to do that?

But, of course, it’s not an easy thing to accomplish.

It requires a radical change of mind-set, and fundamental changes to the way that we approach the work. Many people have had a hard time achieving it.